The NVIDIA Jetson Nano Developer Kit plugs and plays with the Raspberry Pi V2 Camera! Looky here:

Background

Since the introduction of the first Jetson in 2014, one of the most requested features has been Raspberry Pi camera support. The Jetson Nano has built-in support, no finagling required.

The Jetson family has always supported MIPI-CSI cameras. MIPI stands for Mobile Industry Processor Interface, and CSI stands for Camera Serial Interface. This protocol is for high-speed transmission between cameras and host devices. Basically it's a hose straight to the processor; there isn't a lot of overhead like there is with, say, a USB stack.

However, for those folks who are not professional hardware/software developers, getting access to inexpensive imaging devices through that interface has been, let’s say, challenging.

This is for a couple of reasons. First, the camera connection and wiring is through a connector to which most hobbyists don’t have good access. In addition, there’s a lot of jiggering with the drivers for the camera in the Linux kernel along with manipulation of the device tree that needs to happen before imaging magic occurs. Like I said, pro stuff. Most people take the path of least resistance, and simply use a USB camera.

Raspberry Pi Camera Module V2

At the same time, one of the most popular CSI-2 cameras is the Raspberry Pi Camera Module V2. The camera has a ribbon cable which attaches to the board through a simple connector. At its core, the RPi camera consists of a Sony IMX219 imager, and it is available in different versions, with and without an infrared filter. Leaving out the infrared filter in the Pi NoIR camera (NoIR = No Infrared) allows people to build 'night vision' cameras when paired with infrared lighting. And they cost ~$25, lots of bang for the buck!

Are they the be-all and end-all of cameras? Nope, but you can get in the game for not a whole lot of cash.

Note: The V1 Raspberry Pi Camera Module is not compatible with the default Jetson Nano install. The driver for its imaging element, the OV5647, is not included in the base kernel modules.

Jetson Nano

Here's the thing. The Jetson Nano Developer Kit has an RPi camera compatible connector! Device drivers for the IMX219 are already installed, and the camera is configured. Just plug it in, and you're good to go.

Installation

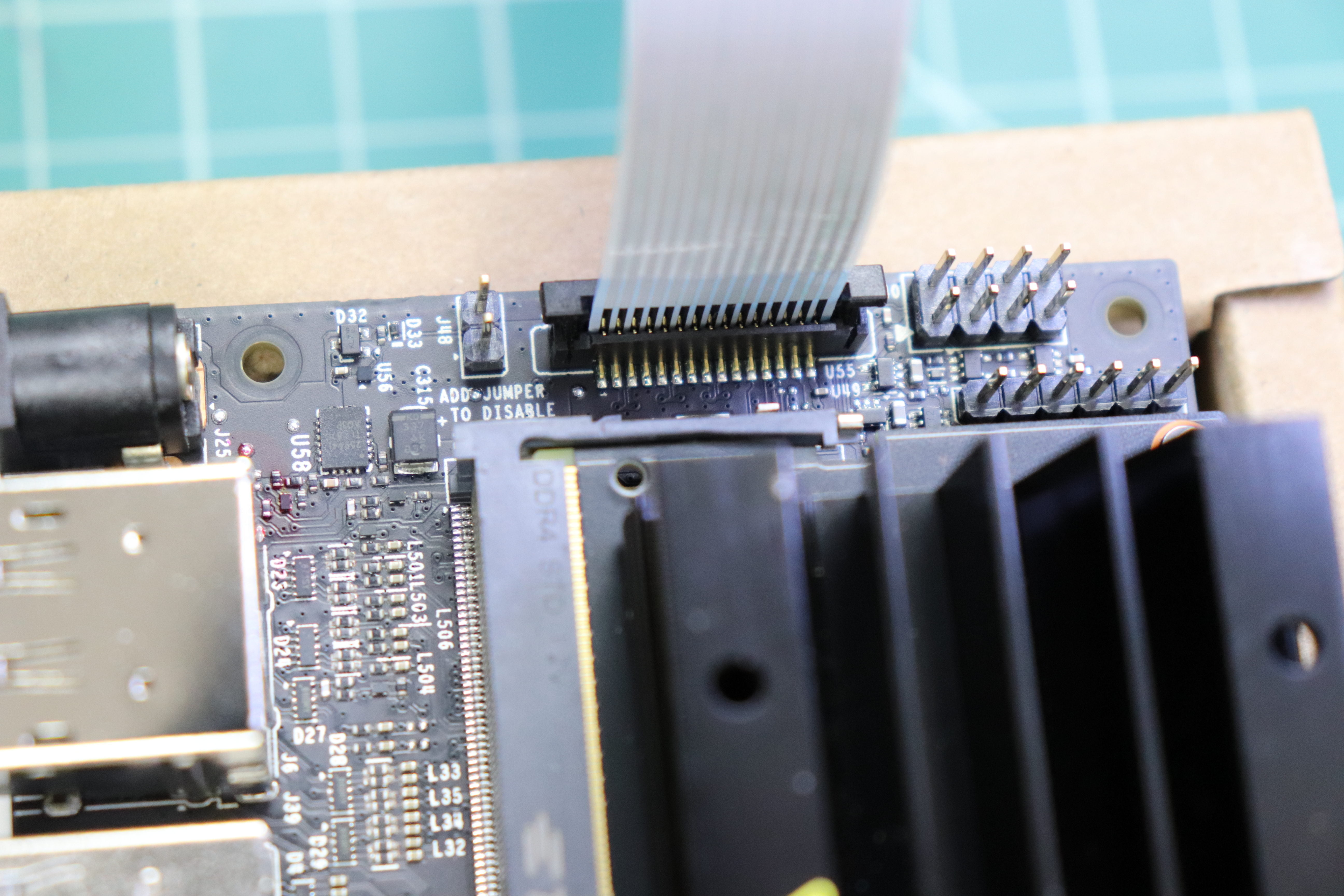

Installation is simple. On the Jetson Nano J13 Camera Connector, lift up the piece of plastic which will hold the ribbon cable in place. Be gentle; you should be able to pry it up with a finger/fingernail. Once it is loose, insert the camera ribbon cable with the contacts on the cable facing inwards towards the Nano module. Then press down on the plastic tab to capture the ribbon cable. Some pics (natch):

Jetson Nano J13 Camera Connector (Closed)

Jetson Nano J13 Camera Connector (Open)

Jetson Nano J13 Connector (with Camera Ribbon Cable)

Make sure that the camera cable is held firmly in place after closing the tab. Here’s a pro tip: Remove the protective plastic film which covers the camera lens on a new camera before use. You’ll get better images (don’t ask me how I know).

Testing and some Codez

The CSI-Camera repository on GitHub contains some sample code to interface with the camera. Once installed, the camera should show up on /dev/video0. On the Jetson Nano, GStreamer is used to interface with cameras. Here is a simple command line to test the camera (Ctrl-C to exit):

$ gst-launch-1.0 nvarguscamerasrc ! nvoverlaysink

On newer Jetson Nano Developer Kits (the B01 carrier board), there are two CSI camera slots. You can use the sensor_id attribute with nvarguscamerasrc to specify the camera. Valid values are 0 or 1 (the default is 0 if not specified), i.e.

nvarguscamerasrc sensor_id=0
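If you would rather start the preview from a Python script, one option is to hand the pipeline to `subprocess` as a list of arguments, so no shell quoting is involved (curly quotes pasted from a web page are a common way to break these commands). Note that the property that picks the camera slot is `sensor_id`; `sensor_mode` selects one of the sensor's resolution/frame-rate modes, as a reader points out in the comments. This is a minimal sketch; the helper names `preview_argv` and `run_preview` are hypothetical, and it assumes the two-slot B01 carrier board.

```python
import subprocess
from typing import List


def preview_argv(sensor_id: int = 0) -> List[str]:
    """Build the gst-launch-1.0 argument list for a quick CSI camera preview.

    Hypothetical helper: assumes a B01 carrier board with two CSI slots,
    so sensor_id must be 0 or 1.
    """
    if sensor_id not in (0, 1):
        raise ValueError("sensor_id must be 0 or 1")
    # gst-launch-1.0 joins its arguments back into one pipeline description,
    # so each element, property, and '!' link can be a separate list item.
    return ["gst-launch-1.0", "nvarguscamerasrc", f"sensor_id={sensor_id}",
            "!", "nvoverlaysink"]


def run_preview(sensor_id: int = 0) -> int:
    """Run the preview and return gst-launch's exit code (Ctrl-C stops it)."""
    return subprocess.run(preview_argv(sensor_id)).returncode
```

For example, `run_preview(1)` would open the camera in the second slot.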

A more specific example, which takes into account the actual modes of the particular sensor:

$ gst-launch-1.0 nvarguscamerasrc sensor_id=0 ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=0 ! 'video/x-raw,width=960, height=616' ! nvvidconv ! nvegltransform ! nveglglessink -e

This requests GStreamer to open a camera stream 3280 pixels wide by 2464 high at 21 frames per second and display it in a window that is 960 pixels wide by 616 pixels high. The ‘flip-method’ is useful when you need to change the orientation of the camera, because it flips the picture around for you. You can get some more tips in the README.md file in the repository.

There are also a couple of simple ‘read the camera and show it in a window’ code samples, one written in Python, the other C++.
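The Python sample reads frames through OpenCV, which needs a pipeline that ends in `appsink` and hands OpenCV BGR frames. The sketch below builds such a string for a full-resolution capture at 21 fps scaled down to 960x616, like the command line above. The function name and parameters are illustrative, not necessarily the ones used in the repository, and the flip-method descriptions follow the nvvidconv documentation as I understand it, so double check them on your release.

```python
# flip-method values accepted by nvvidconv (descriptions are a summary)
FLIP_METHODS = {
    0: "none",
    1: "rotate 90 degrees counterclockwise",
    2: "rotate 180 degrees",
    3: "rotate 90 degrees clockwise",
    4: "flip horizontally",
    5: "flip across the upper-right diagonal",
    6: "flip vertically",
    7: "flip across the upper-left diagonal",
}


def gstreamer_pipeline(sensor_id=0, capture_width=3280, capture_height=2464,
                       framerate=21, flip_method=0,
                       display_width=960, display_height=616):
    """Return a GStreamer pipeline string for cv2.VideoCapture (sketch)."""
    if flip_method not in FLIP_METHODS:
        raise ValueError(f"flip_method must be one of {sorted(FLIP_METHODS)}")
    return (
        f"nvarguscamerasrc sensor_id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width=(int){capture_width}, "
        f"height=(int){capture_height}, format=(string)NV12, "
        f"framerate=(fraction){framerate}/1 ! "
        # nvvidconv flips/rotates, scales, and copies out of NVMM memory
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width=(int){display_width}, "
        f"height=(int){display_height}, format=(string)BGRx ! "
        # videoconvert drops the padding byte so OpenCV gets 3-channel BGR
        "videoconvert ! video/x-raw, format=(string)BGR ! appsink"
    )
```

Usage would look like `cap = cv2.VideoCapture(gstreamer_pipeline(flip_method=2), cv2.CAP_GSTREAMER)`, which only works if your OpenCV build has GStreamer support.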

Note: Starting with JetPack 4.3/L4T 32.3.1, the Jetson ships with OpenCV 4. This means that if you are using an earlier version of JetPack, you will need to select an earlier release of the CSI-Camera repository. To do that, before running the samples:

$ git clone https://github.com/JetsonHacksNano/CSI-Camera.git

$ cd CSI-Camera

$ git checkout v2.0
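If you are not sure which OpenCV you have, `cv2.__version__` tells you. Here is a tiny, hypothetical helper that turns that string into the release to check out, assuming (as the note above implies) that OpenCV 4 means JetPack 4.3 or later and the current code, while anything older means the `v2.0` tag.

```python
from typing import Optional


def csi_camera_release(opencv_version: str) -> Optional[str]:
    """Suggest a CSI-Camera tag for an OpenCV version string such as "3.2.0".

    Returns "v2.0" for OpenCV 3.x and earlier, or None when the default
    (current) branch should be used.
    """
    major = int(opencv_version.split(".")[0])
    return "v2.0" if major < 4 else None
```

On the Jetson you would call it as `csi_camera_release(cv2.__version__)`.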

The third demo is more interesting. The ‘face_detect.py’ script runs a Haar Cascade classifier to detect faces on the camera stream. You can read the article Face Detection using Haar Cascades to learn the nitty gritty. The example in the CSI-Camera repository is a straightforward implementation from that article. This is one of the earlier examples of mainstream machine learning.
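Part of what makes Haar cascades fast enough for a live stream is the integral image (summed-area table): once it is built, the sum of any rectangle of pixels costs four lookups, and a Haar-like feature is just the difference between such sums. The pure Python sketch below illustrates that idea on a small grayscale array; it is not OpenCV's implementation, which is what `face_detect.py` actually calls.

```python
from typing import List


def integral_image(img: List[List[int]]) -> List[List[int]]:
    """Return an (h+1) x (w+1) table where ii[y][x] is the sum of img[:y][:x]."""
    h = len(img)
    w = len(img[0]) if h else 0
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # everything above this row plus the running sum of this row
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle whose top-left pixel is (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

For the 3x3 image `[[1, 2, 3], [4, 5, 6], [7, 8, 9]]`, the lower-right 2x2 block sums to 28 no matter how large the image is, because only four table entries are read.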

Notes

Updated 2-29-2020 Simpler camera test, and information on how to access the different CSI cameras on the Jetson Nano B01 carrier board.

A Logitech C920 webcam is used in the video through the Cheese application.

Demonstration environment:

- Jetson Nano Developer Kit

- L4T 32.1.0

- Raspberry Pi Camera Module V2

92 Responses

Wondering if the OV5647 cams (like Raspberry Pi Camera v1) will also be compatible with the #JetsonNano Dev Kit? Would you happen to know?

Thanks in advance

I do not know the answer to your question. NVIDIA seems to stress that the IMX219 kernel module is shipped with the Jetson Nano, I don’t know about the OV5647.

Please ask this question on the official NVIDIA Jetson forum where a large number of developers and NVIDIA engineers share their experience. The forum is here: https://devtalk.nvidia.com/default/board/371/jetson-nano/

Thanks for reading!

I can help: the Raspberry Pi camera is compatible. I did have some issues with other cameras with night vision extensions. USB webcams also work.

Did you manage to get it working, the night vision one?

I have tried the “original” raspberry v2.1 cam, this one is working.

But a second one called “Raspberry Pi Full HD Kamera Modul” with night vision from electreeks.de just won't work at all.

Cheers!

For the default Jetson Nano Image, you must use an IMX219 based sensor, such as the Raspberry Pi 1.2 NoIR camera. The camera you mention is a OV5647 sensor. Thanks for reading!

Thank you for clearing this up, kangalow!

I have the Raspberry Pi Noir Kamera-Modul V2 connected and working, unfortunately the pictures are blue in the middle and red outside.

maybe it is just broken…

Funny thing is, the NVIDIA AI says “jellyfish” all the time.

haha..

I’m glad you got it to work. Thanks for reading!

I’m currently working on a project using the Jetson Nano 2GB Developer Kit and the Raspberry Pi Camera Board v2 (CSI 8-megapixel Sony IMX219). I encountered an issue while trying to check if the CSI camera is working using the following command:

nvgstcapture-1.0

However, when I run this command, a white window appears instead of the expected camera feed. I’ve double-checked the connections and configurations, but I’m still facing this problem.

Has anyone else experienced a similar issue, or does anyone have suggestions on how to troubleshoot and resolve this problem? Any insights or guidance would be greatly appreciated.

@kangalow, can you help with this issue?

I don’t know what the issue is. There could be a variety of problems, incorrect installation, bad cable, bad camera. Please ask for help on the official NVIDIA Jetson 2GB Nano forum, where a large group of developers and NVIDIA engineers share their experience: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-nano/76

Good luck on your project!

Awesome article! Thanks. Would you happen to know the gstreamer command line to operate a second CSI camera on the nano?

Thank you for the kind words. I don’t know the sequence, but there are camera experts on the Nano forum. Please ask this question on the official NVIDIA Jetson forum where a large number of developers and NVIDIA engineers share their experience. The forum is here: https://devtalk.nvidia.com/default/board/371/jetson-nano/

Thanks for reading!

Hi there!

I tried to do it like you did! And thanks for your time and work on this write-up. But I got some issues and don't know how to solve them, because I am fairly new to Linux.

I think that's what causes my trouble:

(Argus) Error FileOperationFailed: Connecting to nvargus-daemon failed: Structure needs cleaning (in src/rpc/socket/client/SocketClientDispatch.cpp, function openSocketConnection(), line 201)

(Argus) Error FileOperationFailed: Cannot create camera provider (in src/rpc/socket/client/SocketClientDispatch.cpp, function createCameraProvider(), line 102)

Error generated. /dvs/git/dirty/git-master_linux/multimedia/nvgstreamer/gst-nvarguscamera/gstnvarguscamerasrc.cpp, execute:515 Failed to create CameraProvider

ERROR: from element /GstPipeline:pipeline0/GstEglGlesSink:eglglessink0: Output window was closed

It would be great if someone could tell me what I need to take care of.

Greetings Nico

This is difficult for me to debug. I don’t know what application code you are calling, and I don’t know German. Please ask this question on the official NVIDIA Jetson forum where a large number of developers and NVIDIA engineers share their experience. The forum is here: https://devtalk.nvidia.com/default/board/371/jetson-nano/

Thanks a lot! I was able to fix it by using another SD card; something went wrong with the old one. Now everything works as described.

I’m glad you got it to work!

Thanks for great work!

I would like to connect my custom camera to the Jetson Nano. My question is about connecting a different camera to the Nano: can you tell me which kernel files need to be edited for a different camera? I have all the data for my custom-made camera. I think it is not impossible to do that.

Thank you for the kind words. There may be several things that you have to do, depending on which type of sensor you are using in your camera. This question is beyond what can be answered here. Please ask this question on the official NVIDIA Jetson forum where a large number of developers and NVIDIA engineers share their experience. The forum is here: https://devtalk.nvidia.com/default/board/371/jetson-nano/

You have great tutorials, I really appreciate them!

When I run the gst-launch command in the terminal it works fine, no problem. But when I tried your python example (simple_camera.py) it could not open the camera. I found out that the pre-installed opencv-3.2.0 was built without gstreamer support:

Python 3.6.7 (default, Oct 22 2018, 11:32:17)

[GCC 8.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import cv2

>>> print(cv2.getBuildInformation())

…

GStreamer: NO

I used a newly flashed SD card with Jetpack. Do you have any idea on how to proceed?

Thank you for the kind words. Did you try with Python 2.7? For Python 3.6, there are a lot of steps needed to install OpenCV, so it is possible that the original OpenCV was overwritten.

According to the NVIDIA forum, the V1 camera will not work on the Jetson Nano: https://devtalk.nvidia.com/default/topic/1049605/jetson-nano/-raspberry-pi-version-1-camera-does-not-work/post/5327152/#5327152

I tried to make it work with no success. I bought a V2 version and voilà!!!
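A quick way to check for the problem described above from a script is to look for the GStreamer entry in `cv2.getBuildInformation()`. The helper below is a heuristic sketch (its name is hypothetical): the layout of that report differs between OpenCV releases, with some printing `YES`/`NO` on the same line and some putting details on the following lines, so treat an unexpected result as a prompt to read the report yourself.

```python
from typing import Optional


def gstreamer_enabled(build_info: str) -> Optional[bool]:
    """Return True/False from the 'GStreamer:' entry, or None if it is absent."""
    lines = build_info.splitlines()
    for i, line in enumerate(lines):
        key, sep, value = line.strip().partition(":")
        if sep and key == "GStreamer":
            value = value.strip()
            if value:
                return value.upper().startswith("YES")
            # Some builds list details on the next line instead,
            # e.g. "base: YES (ver ...)".
            following = lines[i + 1].strip() if i + 1 < len(lines) else ""
            return "YES" in following
    return None
```

On the Jetson: `print(gstreamer_enabled(cv2.getBuildInformation()))`.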

I am glad you got it to work. Thanks for reading!

In this article you use the camera utility gst-launch-1.0. In several of the Nvidia demos they use another utility nvgstcapture-1.0.

Can you explain the differences? I am very new to Linux, so this may be obvious to more experienced users.

gst-launch is reading from the camera and sending it to the display. On the other hand, nvgstcapture is an application that uses gstreamer and omx to capture video and save it to a file through the onboard hardware encoders. Here’s a deeper explanation: https://developer.ridgerun.com/wiki/index.php?title=Gstreamer_pipelines_for_Jetson_TX1
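For comparison, here is a sketch of a gst-launch pipeline that records to a file instead of the screen, built as an argument list from Python. It assumes your JetPack release provides the `nvv4l2h264enc` hardware encoder element (older guides use `omxh264enc` instead); the helper name, output path, resolution, and frame rate are placeholders. The `-e` flag makes gst-launch send end-of-stream on Ctrl-C, so the MP4 file gets finalized.

```python
from typing import List


def record_argv(output_path: str, sensor_id: int = 0, width: int = 1920,
                height: int = 1080, framerate: int = 30) -> List[str]:
    """Build a gst-launch-1.0 command that records H.264 video to an MP4 file."""
    caps = (f"video/x-raw(memory:NVMM),width={width},height={height},"
            f"format=NV12,framerate={framerate}/1")
    return [
        "gst-launch-1.0", "-e",        # -e: send EOS on Ctrl-C so the file is finalized
        "nvarguscamerasrc", f"sensor_id={sensor_id}", "!",
        caps, "!",
        "nvv4l2h264enc", "!",          # hardware H.264 encoder (assumed available)
        "h264parse", "!",
        "mp4mux", "!",
        "filesink", f"location={output_path}",
    ]
```

Pass the list to `subprocess.run`, e.g. `subprocess.run(record_argv("capture.mp4"))`, and stop the recording with Ctrl-C.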

Thanks for reading!

hello,

Thank you for all your tutorials, they really help me a lot!

I'm a TX2 user and wonder if the RPi V2 camera can work on my TX2 with the latest JetPack 4.2 version?

I have an Auvidea J20 board, a 6-CSI-camera add-on module. I plugged everything into my TX2 dev kit and ran some "sudo" commands (5 i2cset commands) as the Auvidea reference manual said. The GPIO light switches on, but there is still no "/dev/video".

I found someone having the same problem with the J20 and the RPi V2 camera, and some questions on the NVIDIA forums (mainly from 2017 and 2018). Still, I haven't found much useful help.

I know that RidgeRun has done this and nicely wrote a wiki on how to make it work. But their drivers cost $2,499. That's far more than I can afford. I don't know what I should do now.

I'd appreciate any advice or guidance.

John

I do not have any experience with the Auvidea J20 boards. Other than contacting the vendor that sold you the product, and the NVIDIA forums, I don't have any further suggestions. Good luck on your project!

Thank you anyway.

Found another camera for the Nano board.

I ordered it today. Maybe something for you to try as well?

https://www.e-consystems.com/nvidia-cameras/jetson-nano-cameras/3mp-mipi-camera.asp

There is a product video on YouTube.

Does the python3-picamera library work on the Jetson Nano? Or is GStreamer the alternative to the python3-picamera library?

pi-camera is for the Raspberry Pi. Typically people use GStreamer on the Jetson to interface with the CSI camera.

Thanks for the great tutorial! Do you have any commands handy to stream this video over an SSH X11 connection?

In case this helps anyone trying to stream over X11 to a remote desktop:

ssh -X $USER_NAME@$NANO_IP -C "gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=2 ! 'video/x-raw,width=960, height=616' ! nvvidconv ! ximagesink"
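If you script that SSH command, the pipeline has to survive a second shell on the Nano, which is where mismatched or curly quotes tend to bite. One way is to quote each token with Python's `shlex.quote` and pass the rest to `ssh` as a list. This is a sketch: the helper name is hypothetical, the user name and host are placeholders, and the pipeline tokens roughly mirror the command above.

```python
import shlex
from typing import List


def remote_preview_argv(user: str, host: str, pipeline: List[str]) -> List[str]:
    """Build an ssh command that runs gst-launch-1.0 on the Nano over X11."""
    # ssh hands the command to the remote shell as one string, so quote every
    # token; '!' and the parentheses in the caps then pass through literally.
    remote = " ".join(shlex.quote(token) for token in ["gst-launch-1.0"] + pipeline)
    # -X: X11 forwarding, -C: compression
    return ["ssh", "-X", "-C", f"{user}@{host}", remote]


PIPELINE = [
    "nvarguscamerasrc", "!",
    "video/x-raw(memory:NVMM),width=3280,height=2464,framerate=21/1,format=NV12", "!",
    "nvvidconv", "flip-method=2", "!",
    "video/x-raw,width=960,height=616", "!",
    "nvvidconv", "!",
    "ximagesink",
]
```

Then run it with something like `subprocess.run(remote_preview_argv("your_user", "your_nano_ip", PIPELINE))`.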

Any thoughts on why a Raspberry Pi cam 2.1 is very red and grainy? simple_camera and face-detect work.

Regards, Geordy

Is it a RPi camera, or a clone? Please take a look at this in the Jetson Nano forum, and follow up with questions there: https://devtalk.nvidia.com/default/topic/1053722/jetson-nano/support-for-waveshare-imx-219-wide-angle-camera/

Hi. I tried this code, but it doesn't work. Can you help me?

rancer91@rancer91-desktop:~/CSI-Camera$ python simple_camera.py

File “simple_camera.py”, line 27

print gstreamer_pipeline(flip_method=0)

^

SyntaxError: invalid syntax

I have downloaded the GitHub files that you instructed us to download; however, when I run the GStreamer code, it pops up a blank screen and says no camera detected. Do you know why this is happening?

Your issue could be the result of multiple causes. First to check is which camera are you using? The second is to make sure that the camera is installed correctly. Does the following work?

$ gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=0 ! 'video/x-raw,width=960, height=616' ! nvvidconv ! nvegltransform ! nveglglessink -e

Please, I need help with a CSI camera I bought on Amazon: https://www.amazon.com/Yahboom-IMX219-77-Camera-NVIDIA-Interface/dp/B07TS7NCP2

It has out-of-the-box NVIDIA Jetson Nano support, so I connected it as shown. It even shows up when running `ls /dev/video0`

but when running the example shown on https://github.com/JetsonHacksNano/CSI-Camera it merely screenshots my screen as opposed to streaming a video

The command specified is

gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=0 ! 'video/x-raw,width=960, height=616' ! nvvidconv ! nvegltransform ! nveglglessink -e

Is the video size you specified: 3280×2464 a video format for your camera? As specified in the Github repository README, you can check the camera image formats using:

It is not. On checking the image formats though, I switched to a supported one and I still get the same behaviour but the screenshot is a smaller size

Have you looked in the logs to see if there is an error? e.g.

This actually worked. Discovered that the camera was the issue. Thanks!

I am glad you got it to work.

Hi

Is there any way that I can capture RGB images through CSI as 10-bit RAW RGB?

Thanh

Please ask this question on the official NVIDIA Jetson forum where a large number of developers and NVIDIA engineers share their experience. The forum is here: https://devtalk.nvidia.com/default/board/371/jetson-nano/

kangalow, can you please tell me how I can record video using my Raspberry Pi Camera version V2? I spent almost a day but can't find a way to record video with the V2 camera.

Please ask this question on the official NVIDIA Jetson forum where a large number of developers and NVIDIA engineers share their experience. The forum is here: https://devtalk.nvidia.com/default/board/371/jetson-nano/

shubh@shubh-desktop:~$ gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3820, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=0 ! 'video/x-raw,width=960, height=616' ! nvvidconv ! nvegltransform ! nveglglessink -e

Setting pipeline to PAUSED …

Using winsys: x11

Pipeline is live and does not need PREROLL …

Got context from element 'eglglessink0': gst.egl.EGLDisplay=context, display=(GstEGLDisplay)NULL;

Setting pipeline to PLAYING …

New clock: GstSystemClock

Error generated. /dvs/git/dirty/git-master_linux/multimedia/nvgstreamer/gst-nvarguscamera/gstnvarguscamerasrc.cpp, execute:532 Failed to create CaptureSession

Got EOS from element "pipeline0".

Execution ended after 0:00:00.083059046

Setting pipeline to PAUSED …

Setting pipeline to READY …

Setting pipeline to NULL …

Freeing pipeline …

There’s nothing to go on here. What camera are you using? Is it installed correctly? Does it show up in /dev ?

Is the image quality on these cameras just this low, or are there some settings that can be tweaked?

I don’t know what you mean. Which camera are you talking about, and what type of image quality are you expecting? Do you actually have a camera that you are comparing it against, or have one of the cameras on your Jetson? Are you comparing this to a Raspberry Pi running the same camera?

I just received another new nano and it is a little different than the others. TWO CAMERA CONNECTORS for raspi v2. Stereo vision!

Hello! Thanks for putting this tutorial together, it’s very easy to follow! I did have a question for you though.

I bought an enclosure for the jetson nano and, the way it’s designed, I have the option of having my camera (rpi V2) face backwards or flip it upside down. Looking through your documentation (your readme file), it appears it should be easy enough to change flip_method to 2 and it should flip it 180 degrees but, unfortunately, the whole thing hangs when I attempt this and I have to kill the process through process manager. If I change it back to 0 though, everything works again.

Below is the code I’m using:

gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3820, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=2 ! 'video/x-raw,width=960, height=616' ! nvvidconv ! nvegltransform ! nveglglessink -e

Am I missing something really obvious? Thanks for all your help!

The command appears to be correct. The first thing I would check is if there’s an extra carriage return/line feed in your string. You might try: https://developer.ridgerun.com/wiki/index.php?title=Jetson_Nano/Gstreamer/Example_Pipelines/Transforming#Rotation

Hi! First of all, I want to thank you for your awesome content. I was directed here from a Medium article I was reading on the Jetson Nano, and good Lord, it was nice to finally find a step-by-step instruction guide. So far I've managed to get everything you've offered going, and I only wish I had gotten here earlier.

Now I only have one question, which I'm asking here because this seems like the most relevant place and others might benefit. I've tried to search for the answers on the NVIDIA forum, but clearly I need to work on my search terms.

So I don't know if you have noticed this, but OpenCV doesn't exit gracefully when running face_detect.py. A hint of this is that GST_ARGUS is stuck at PowerServiceHWVic::cleanResources, which suggests that GStreamer is still somehow holding on to resources. I've narrowed this down to the classifier.detectMultiScale(img, 1.3, 5) function, which I'm guessing is not freeing up memory, or something.

I'm wondering if you could tell me how to work around this, or at least give me some hints on how to figure out what's going wrong.

Thank you for the kind words.

The sample is overly simple, and doesn’t have all the error checking/termination code that is needed for “real” situations.

How are you trying to terminate the program? Hitting the escape key is the one that does cap.release()
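A pattern that helps here is to put the release in a `finally` block, so the capture is shut down however the loop ends (Escape key, failed read, or an exception). The sketch below works with any object that has `read()` and `release()` methods, such as `cv2.VideoCapture`; the function name is hypothetical, and this is not the code in `face_detect.py`.

```python
from typing import Any, Callable


def run_capture_loop(cap: Any, handle_frame: Callable[[Any], None],
                     should_stop: Callable[[], bool]) -> int:
    """Read frames until should_stop() is true or a read fails.

    The capture is always released, even if handle_frame raises.
    Returns the number of frames handled.
    """
    frames = 0
    try:
        while not should_stop():
            ok, frame = cap.read()
            if not ok:
                break
            handle_frame(frame)
            frames += 1
    finally:
        cap.release()
    return frames
```

With OpenCV this might look like `run_capture_loop(cap, lambda f: cv2.imshow("CSI Camera", f), lambda: (cv2.waitKey(1) & 0xFF) == 27)` followed by `cv2.destroyAllWindows()`.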

Hi! Watching Ubuntu's System Monitor, I found a memory leak when I open and close the pipeline again and again.

When the program finishes, memory returns to its original state.

Do you know about this issue, and do you have a solution?

#include "mainwindow.h"
#include "ui_mainwindow.h"

MainWindow::MainWindow(QWidget *parent) :
    QMainWindow(parent),
    ui(new Ui::MainWindow)
{
    ui->setupUi(this);
    timer = new QTimer(this);
}

MainWindow::~MainWindow()
{
    delete ui;
}

void MainWindow::on_pushButton_open_webcam_clicked()
{
    QString strPipline = QString("nvarguscamerasrc ! video/x-raw(memory:NVMM), width=(int)%1, height=(int)%2, format=(string)NV12, framerate=(fraction)%3/1 ! nvvidconv flip-method=%4 ! video/x-raw, width=(int)%5, height=(int)%6, format=(string)BGRx ! videoconvert ! video/x-raw, format=(string)BGR ! appsink sync=true")
        .arg(1920)
        .arg(1080)
        .arg(30)
        .arg(0)
        .arg(ui->label->size().width())
        .arg(ui->label->size().height());

    cap.open(strPipline.toStdString(), CAP_GSTREAMER);

    if(!cap.isOpened()) // Check if we succeeded
    {
        cout << "camera is not open" << endl;
    }
    else
    {
        cout << "camera is open" << endl;
        connect(timer, SIGNAL(timeout()), this, SLOT(update_window()));
        timer->start(20);
    }
}

void MainWindow::on_pushButton_close_webcam_clicked()
{
    disconnect(timer, SIGNAL(timeout()), this, SLOT(update_window()));
    cap.release();

    Mat image = Mat::zeros(frame.size(), CV_8UC3);
    qt_image = QImage((const unsigned char*) (image.data), image.cols, image.rows, QImage::Format_RGB888);
    ui->label->setPixmap(QPixmap::fromImage(qt_image));
    ui->label->resize(ui->label->pixmap()->size());

    cout << "camera is closed" << endl;
}

void MainWindow::update_window()
{
    cap >> frame;
    cvtColor(frame, frame, CV_BGR2RGB);
    qt_image = QImage((const unsigned char*) (frame.data), frame.cols, frame.rows, QImage::Format_RGB888);
    ui->label->setPixmap(QPixmap::fromImage(qt_image));
    ui->label->resize(ui->label->pixmap()->size());
}

Does the memory leak happen to be the size of two QImages that you allocate when you close the pipeline?

Thank you for your series on Jetson Nano.

I am using camera IMX219-77 IR for my Jetson Nano. I followed the instruction but the video has purple-like color.

What is the reason of that and how can I obtain normal color video?

Many thanks,

I do not understand your question. You are using a camera without an infrared filter. If you do not use an infrared filter, the images can appear purple. What are your expectations here?

Thank you for your article!

I tried to set a lower framerate (for example: framrate=10/1), but it still runs at framerate=59.

Could you help me?

Thank you very much.

Hard to comment without seeing the exact setting you use.
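One thing worth checking is whether the requested size and frame rate line up with one of the sensor's modes, which GST_ARGUS prints when the pipeline starts. The sketch below shows one way to pick the smallest mode that satisfies a request. The mode list is an illustrative example, not an authoritative table for any sensor, and this is not how nvarguscamerasrc selects modes internally. In this example, asking for 10 fps at 1280x720 still lands on a 60 fps mode, because the list has no slower mode of that size.

```python
from typing import List, NamedTuple, Optional


class SensorMode(NamedTuple):
    index: int
    width: int
    height: int
    fps: float


# Illustrative mode list only; copy the real one from the GST_ARGUS
# output of your own camera.
EXAMPLE_MODES = [
    SensorMode(0, 3280, 2464, 21.0),
    SensorMode(1, 1920, 1080, 30.0),
    SensorMode(2, 1280, 720, 60.0),
]


def pick_mode(modes: List[SensorMode], width: int, height: int,
              fps: float) -> Optional[SensorMode]:
    """Return the smallest mode covering the requested size and frame rate."""
    candidates = [m for m in modes
                  if m.width >= width and m.height >= height and m.fps >= fps]
    if not candidates:
        return None
    # Prefer the fewest pixels, then the lowest frame rate.
    return min(candidates, key=lambda m: (m.width * m.height, m.fps))
```
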

This works fine.

But how do I use the camera in Cheese or web browsers?

These cameras do not have V4L2 drivers by default. To work around this, you would have to create a v4l2loopback device and then use GStreamer to sink to that device. Thanks for watching!

Would you please help me out? After I accidentally pulled out the Pi camera while the Jetson Nano was running, the screen fonts and layout became enlarged. What damage might have been done? And how can I find the source of the problem and fix it?
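As a rough sketch of that workaround: after loading the v4l2loopback kernel module (it may not ship with L4T, so you might have to build it yourself), a pipeline can feed the CSI camera into the loopback device, which V4L2 applications such as Cheese can then open. The helper name, the device path `/dev/video1`, the I420 format, and the resolution are all assumptions you may need to change.

```python
from typing import List


def loopback_argv(device: str = "/dev/video1", sensor_id: int = 0,
                  width: int = 1280, height: int = 720,
                  framerate: int = 30) -> List[str]:
    """Build a gst-launch-1.0 command that copies the CSI camera into a
    v4l2loopback device (hypothetical helper; device path is an assumption)."""
    return [
        "gst-launch-1.0",
        "nvarguscamerasrc", f"sensor_id={sensor_id}", "!",
        f"video/x-raw(memory:NVMM),width={width},height={height},"
        f"format=NV12,framerate={framerate}/1", "!",
        # nvvidconv copies the frames out of NVMM memory into a plain format
        "nvvidconv", "!", "video/x-raw,format=I420", "!",
        "v4l2sink", f"device={device}",
    ]
```

Typical use would be `sudo modprobe v4l2loopback`, then `subprocess.run(loopback_argv())`, then selecting the loopback device in Cheese.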

I do not have anything to share on your issue. Please ask this question on the official NVIDIA Jetson Nano forum where a large group of developers and NVIDIA engineers share their experience.

Hi @kangalow,

First of all: many thanks for sharing your knowledge and experiences!

I'm working with the first release of the Nano. After setting up a fresh image and connecting the Pi camera V2, the Python sample works very well. But compiling the C++ sample failed because of missing OpenCV headers. What needs to be done to make the headers available, or to set up the compiler flags correctly?

I cannot tell from your question which version of JetPack/L4T you are running.

I’m using the latest JetPack 4.3 (https://developer.nvidia.com/jetson-nano-sd-card-imager-3231).

I was able to get the cpp-example running by compiling opencv 4. Both CPP and Python 3 are now able to communicate with cv2.

I am glad you were able to get it to work.

Hi, nice video. I just put together my new Jetson Nano, and when I put the cable into the Jetson Nano J13 Camera Connector, the plastic on top broke off. Is there a way to get a replacement part? I have not finished loading the software, so I don't know if the loose connection will work.

As I recall there was a post in the Jetson Nano forum about this issue. If you can’t find it, ask the question there again. The forum: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-nano/76

Hi all!

I want to use 2 cameras with the Jetson Nano B01 for recording stereo videos. Which cameras do I need to buy for at least 30 fps and maximum resolution and quality? Hoping for help =)

There are a variety of vendors offering solutions. A lot depends on your budget and operating environment. Please ask this question on the official NVIDIA Jetson Nano forum, where a large group of developers and NVIDIA engineers share their experience: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-nano/76

Hello

May i know how can i set the Raspberry Pi Camera V2 parameters from Jetson Nano?

Here is the complete list of Raspberry Pi V2 camera parameters:

https://www.raspberrypi.org/documentation/raspbian/applications/camera.md

Please ask this question on the official NVIDIA Jetson Nano developers forum, where a large group of developers and NVIDIA engineers share their experience: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-nano/76

Hello,

First, thank you for this great tutorial. I am using the V2 Pi NoIR camera and it is working. By the way, if the Jetson Nano goes into sleep mode, the camera stops working, but that is not a problem. I just want to ask if there are some methods to configure the camera's exposure time, brightness, etc.

Regards

Please ask this question on the official NVIDIA Jetson Nano developers forum, where a large group of developers and NVIDIA engineers share their experience: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-nano/76

Hi Jim, love your videos!

But this page has an error. The argument for specifying the CSI camera is ‘sensor_id’, not ‘sensor_mode’. To be clear, sensor_mode is also an argument, but it’s for selecting the mode from a list of modes:

https://docs.nvidia.com/jetson/l4t-multimedia/group__ArgusCameraDevice.html

I have executed all the steps mentioned in the video. There were a few errors, but I googled them and resolved them. Now there are no errors, but there is no output either: a new window opens, but there is nothing in it.

Could you please tell me what could be the reason and how to resolve it?

Thanks very much in advance!!

I am using an Arducam IMX447 sensor-based camera with a resolution of 4032×3040 (30 fps).

I wish to get 1348 x 750 (240 fps) using pixel binning.

What would be the GStreamer pipeline? Or is it even possible to achieve this?

N.B. I am processing the frames in OpenCV on my Jetson Nano B01.

I found pixel binning information here: https://developer.ridgerun.com/wiki/index.php?title=Raspberry_Pi_HQ_camera_IMX477_Linux_driver_for_Jetson

I do not have any experience with the IMX447. Please ask this on the official NVIDIA Jetson Nano forum, where a large group of developers and NVIDIA engineers share their experience: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-nano/76

Great video, thanks! Is there any way to connect the Raspberry Pi Camera V2 to a Jetson AGX Xavier? I understand that the connector is different, so I will need an adapter. Has anyone already developed an adapter that I can buy? I also understand that the driver may not be included in the official JetPack distribution, but I’m already somewhat familiar with compiling extra drivers.

The AGX Xavier does not have a direct CSI input like the Jetson Nano and Xavier NX. You can ask for some alternatives on the official NVIDIA Jetson AGX Xavier forum, where a large group of developers and NVIDIA engineers share their experience: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-agx-xavier/75

Hi Again 😉

I am working on getting my Pi camera to work properly. The GStreamer command works nicely: the picture is good and without noticeable lag. However, when running simple_camera.py and especially face_detect.py, the lag is 0.5 to 3 seconds, respectively…

I compared the messages displayed when running the codes with your video and noticed that I have this warning:

[ WARN:0] global /home/nvidia/host/build_opencv/nv_opencv/modules/videoio/src/cap_gstreamer.cpp (933) open OpenCV | GStreamer warning: Cannot query video position: status=0, value=-1, duration=-1

I reinstalled OpenCV, Python 2.7, 3.6 and 3.7. Set 2.7 as default. Nothing helped. Video is still very choppy and laggy…Any hints to what this could be?

BR

Florian

Hi, can you tell me how I can access the Jetson Nano RPi V2 camera via an SSH connection from Windows? My file where I open the camera is not compiling. I have to detect lane lines in live video frames.

Thank you so much.

Hi, can somebody tell me whether the Raspberry Pi Camera Module 3 will work with the NVIDIA Jetson Nano, or whether there are any additional requirements?

The camera needs a driver installed, the driver must match the version of Jetson Linux installed. This may also depend on the version of the camera that you buy. If you need help, please ask on the official NVIDIA Jetson Nano forum where a large group of developers and NVIDIA engineers share their experience: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-nano/76

Great video, thanks a lot!

Instead of a Jetson Nano, I only have a Jetson Orin Nano Developer Kit with an IMX219 stereo camera (which should be a kind of MIPI-CSI(2) camera). I am wondering if your code in the CSI-Camera repo also supports this?

Here’s an article about the Orin Nano and the Raspberry Pi camera if you think it would help: https://wp.me/p7ZgI9-3Jm

My camera works, but when I type python face_detect.py to run the file, it shows "unable to open camera"! Can anyone teach me, please?

From your description, it is difficult to tell what problem you are having. Does the camera work on the other examples? Which camera are you using? Which version of Jetpack?